Introduction

The Circular Electron Positron Collider (CEPC) [1, 2] is a large-scale collider facility proposed in 2012 after the discovery of the Higgs boson. It has a circumference of 100 km and two interaction points, which allows it to operate at multiple center-of-mass energies. Specifically, it serves as a Higgs factory at 240 GeV [3-6], facilitates W+W- threshold scans at 160 GeV, and functions as a Z factory at 91 GeV [7, 8]. Furthermore, it can be upgraded to 360 GeV for a

The particle identification (PID) of hadrons is crucial in high-energy physics experiments, especially in flavor physics and jet tagging [13]. Particle identification can help suppress combinatorial backgrounds, distinguish between final states of the same topology, and provide valuable additional information for jet flavor tagging. Future particle physics experiments, such as CEPC, require advanced detector techniques with PID performance that surpasses that of current techniques.

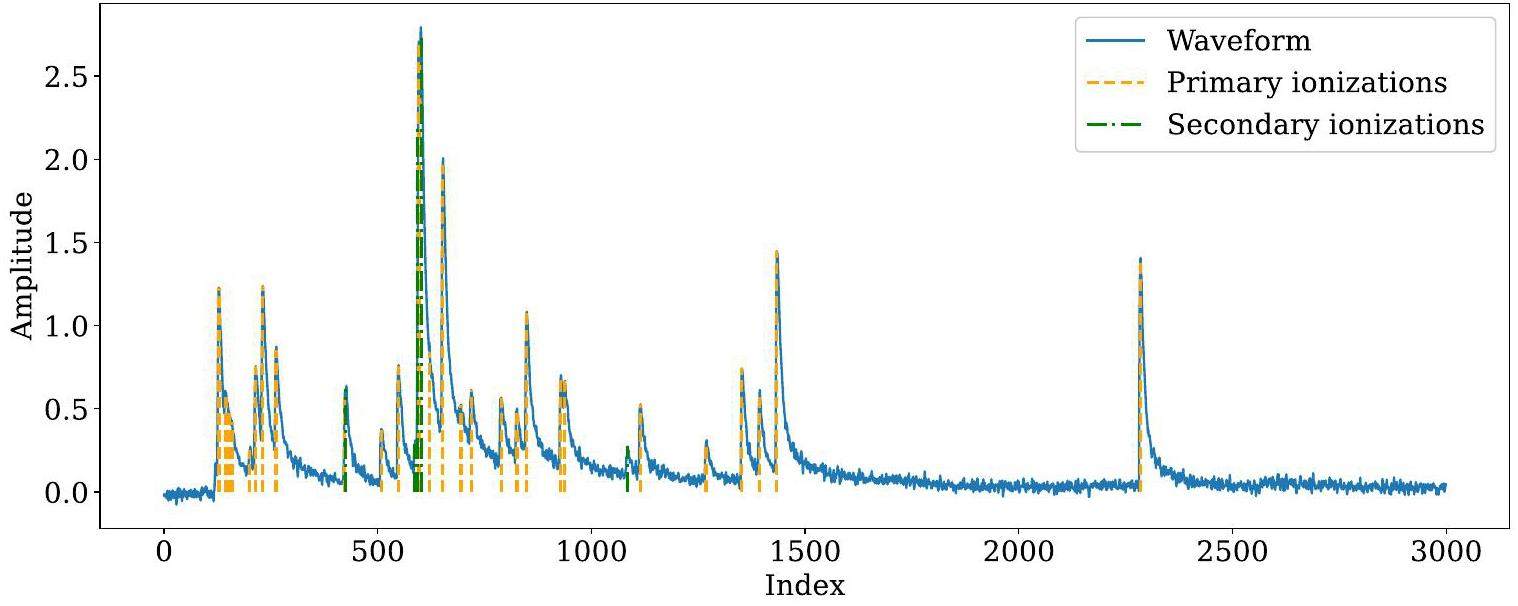

The drift chamber is a key detector in high-energy physics experiments. In addition to charged particle tracking, the drift chamber can also provide excellent PID while requiring almost no additional detector budget. In a drift chamber, PID is based on the ionization behavior of charged particles traversing the working gas. A well-established technique for identifying particles is the measurement of the average ionization energy loss per unit length (dE/dx) of charged particles [14]. In a drift chamber cell, charged particles ionize the gas, creating a cascade of electrons that can be detected as primary signals. This type of ionization is called primary ionization and is a Poisson process. Moreover, some of these electrons can cause secondary ionization, leading to a Landau-distributed dE/dx. The Landau distribution has an infinitely long tail and large fluctuations that limit the dE/dx resolution [15]. Figure 1 shows an example signal waveform in a drift-chamber cell.

Alternatively, the cluster-counting technique directly measures the average number of primary ionizations per unit length in the waveforms processed by fast electronics, rather than dE/dx, which reduces the impact of secondary ionization [16] and significantly improves PID performance. The cluster-counting technique has the potential to improve the resolution by a factor of two. Therefore, the cluster-counting technique, which is the most promising breakthrough in PID, has been proposed for future high-energy-frontier colliders such as the CEPC and the Future Circular Collider (FCC) [17]. A previous study on cluster counting for the BESIII upgrade demonstrated that the cluster-counting method exhibited superior PID performance compared with the dE/dx method, achieving a π/K separation power approximately 1.7 times that of the dE/dx method [18].

Reconstruction poses a significant challenge in cluster counting. An effective reconstruction algorithm must efficiently and accurately determine the number of primary ionizations in a waveform. However, the stochastic nature of ionization processes and the complexity of signals present substantial obstacles to developing reliable cluster-counting algorithms. In traditional methods, cluster-counting algorithms are typically divided into two stages: peak finding (detecting all peaks from both primary and secondary ionizations) and clusterization (determining the number of primary ionizations among the peaks detected in the previous step). For derivative-based peak finding, the first and second derivatives of the waveform are computed, and signals are identified via threshold crossings. Unfortunately, derivative-based algorithms often fail to achieve state-of-the-art performance, especially in scenarios with high pile-up and noise levels. In time-based clusterization, the average time differences between signals from different clusters tend to be larger than those within the same cluster. This information can be exploited to design peak-merging algorithms. However, due to the significant overlap in time difference distributions between inter-cluster and intra-cluster signals, these algorithms often suffer from low accuracy.

Machine learning (ML) is a rapidly advancing field in computer science that uses algorithms and statistical models to enable systems to improve their performance by learning from data. Neural networks, the most commonly used ML techniques, are computational models loosely inspired by the human brain and consist of interconnected layers. Recurrent neural networks (RNNs) [19] and graph neural networks (GNNs) [20] are particularly popular types of neural networks. ML techniques have been widely applied in high-energy and nuclear physics. For instance, in high-energy physics, the GNN-based ParticleNet algorithm was developed for jet tagging [21], with applications to CEPC jet tagging [22]. In nuclear physics, ML techniques have been used to study phase transitions in nuclear matter governed by quantum chromodynamics (QCD) [23, 24], and to analyze heavy-ion collisions across various energy scales [25-27]. Machine learning has shown preliminary promise for handling large-scale data in high-energy physics. For cluster-counting algorithms, ML can leverage full waveform information and potentially uncover hidden features within the signal peaks. This problem can be modeled as a classification task, making it amenable to mature ML tools such as PyTorch [28] and PyTorch Geometric [29].

This paper presents an ML-based algorithm for cluster counting, optimized for a CEPC drift chamber. The remainder of the paper is organized as follows: Section 2.2 introduces the fast simulation method and the simulated samples used to train and test the ML-based algorithm. Section 3 details the ML-based cluster-counting algorithm. Section 4 evaluates the performance of the ML-based algorithm and compares it with traditional methods. Section 5 concludes the paper.

Detector, Simulation and Data Sets

The CEPC Drift Chamber

In the design of the CEPC 4th conceptual detector, a drift chamber is proposed to be inserted between the silicon inner tracker (SIT) and the silicon external tracker (SET). This chamber primarily provides PID capability and enhances tracking and momentum measurements.

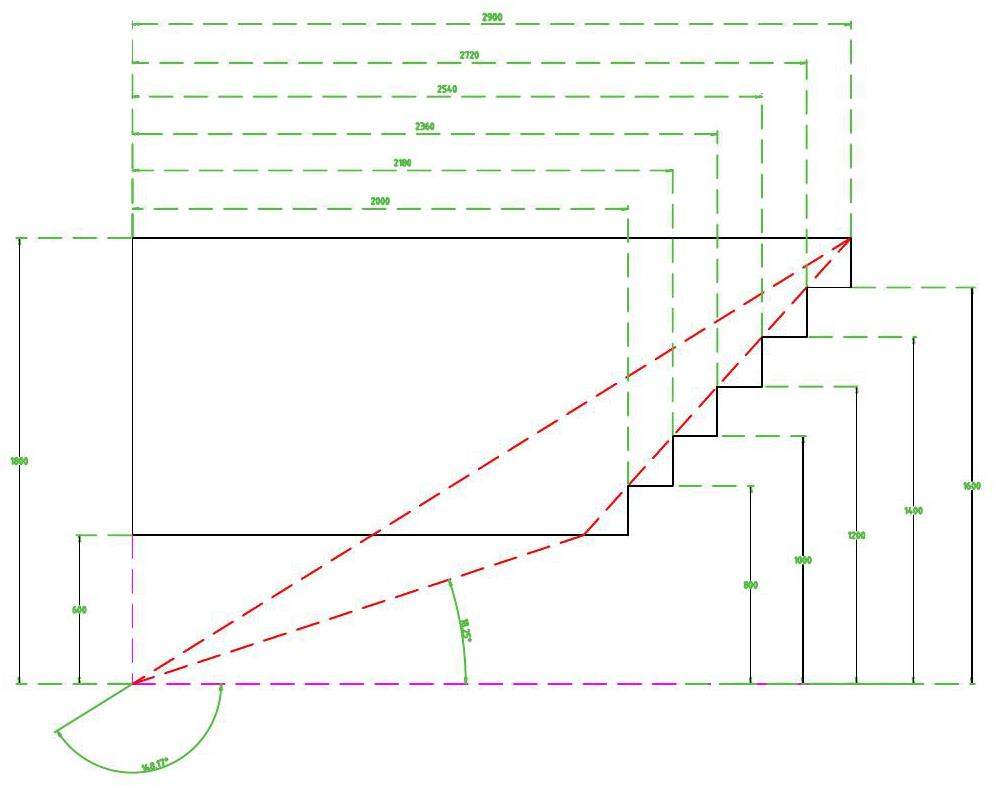

Based on the preliminary design, the chamber length is approximately 5800 mm, with a radial extent ranging from 600 to 1800 mm. The inner wall consists of a carbon fiber cylinder, while the outer support features a carbon fiber frame structure comprising eight longitudinal hollow beams and eight rings. These components are sealed with a gas envelope. The aluminum endplates are designed with a multistepped and tilted shape to minimize deformation caused by wire tension. A schematic of the drift chamber is shown in Fig. 2.

The entire chamber comprises approximately 67 layers. To meet the requirements for PID capability and momentum measurements, a cell size of 18 mm × 18 mm is adopted. Each cell consists of a sense wire surrounded by eight field wires, forming a square configuration. The sense wires are 20 μm gold-plated tungsten wires, while the field wires are 80 μm gold-plated aluminum wires. To achieve a suitable primary ionization density, a gas mixture of 90% He and 10% iC₄H₁₀ is proposed.

Simulation and Data Sets

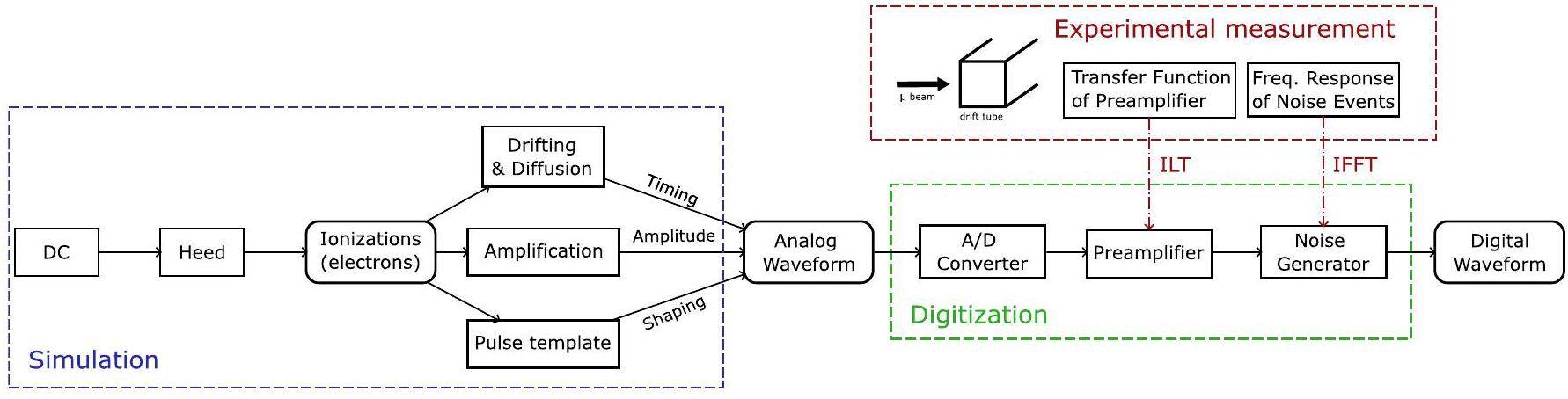

A sophisticated first-principles simulation package was developed for cluster counting. The package precisely simulates particle interactions and detector responses, creating realistic waveforms labeled with MC truth timing, which enables supervised training. The simulation package consists of two components: simulation and digitization. In the simulation step, the geometry of the drift-chamber cells is constructed and the ionizations of charged particles are generated using the Heed package. To reduce computational expense, the transportation, amplification, and signal creation processes for each electron are parameterized according to the Garfield++ simulation results, which output analog waveforms for drift chamber cells [30]. In the digitization step, data-driven electronic responses and noise are taken into account. The impulse response of the preamplifier was measured experimentally and is convolved with the waveform. Noise was extracted from experimental data using the fast Fourier transform and is added to the signal via the inverse fast Fourier transform. The digitization outputs realistic digitized waveforms that exhibit good agreement with experimental data in terms of peak rise times and noise levels. A flowchart of the simulation is presented in Fig. 3.
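
The digitization step described above can be illustrated with a minimal sketch. It is not the simulation package itself: the impulse-response shape, the 30 ns decay constant, and the white Gaussian noise are stand-in assumptions (the paper uses a measured preamplifier response and noise extracted from data via FFT), and the function names `convolve` and `digitize` are hypothetical. The 1.5 GHz sampling rate, 4 ns rise time, and 5% noise level are taken from the text.

```python
import math
import random

def convolve(signal, kernel):
    """Full discrete convolution of two sequences."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        if s == 0.0:
            continue  # skip empty samples to save time
        for j, k in enumerate(kernel):
            out[i + j] += s * k
    return out

def digitize(electron_times_ns, n_samples=600, sampling_ghz=1.5,
             rise_ns=4.0, decay_ns=30.0, noise_frac=0.05, seed=0):
    """Turn electron arrival times into a noisy digitized waveform."""
    rng = random.Random(seed)
    dt = 1.0 / sampling_ghz  # sample spacing in ns

    # Analog input: one unit impulse per arriving electron.
    analog = [0.0] * n_samples
    for t in electron_times_ns:
        idx = int(t / dt)
        if 0 <= idx < n_samples:
            analog[idx] += 1.0

    # ASSUMPTION: toy impulse response with a fast rise and a slow decay,
    # normalized to unit peak amplitude.
    n_kernel = int(5 * decay_ns / dt)
    kernel = [(1.0 - math.exp(-i * dt / rise_ns)) * math.exp(-i * dt / decay_ns)
              for i in range(n_kernel)]
    peak = max(kernel)
    kernel = [k / peak for k in kernel]

    shaped = convolve(analog, kernel)[:n_samples]

    # ASSUMPTION: white Gaussian noise whose sigma is noise_frac of the
    # single-pulse amplitude, instead of data-driven noise.
    return [v + rng.gauss(0.0, noise_frac) for v in shaped]

waveform = digitize([50.0, 120.0])
print(len(waveform), round(max(waveform), 3))
```

Replacing the toy kernel with a measured response and the Gaussian noise with a sampled noise spectrum would bring the sketch closer to the data-driven approach described in the text.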

The simulation geometry is based on the design of the CEPC 4th conceptual detector. According to test beam experiments [31], the waveform exhibits a single-pulse rise time of approximately 4 ns, a noise level of 5%, and a sampling rate of 1.5 GHz. Using the simulation package, MC samples with varying momenta were generated to train and test the neural network algorithm. Detailed information about the samples is presented in Table 1.

| Purpose | Algorithm | Particle | Number of events | Momentum (GeV/c) |
|---|---|---|---|---|
| Training | Peak finding | π± | 5×10⁵ | 0.2-20.0 |
| Testing | Peak finding | π± | 5×10⁵ | 0.2-20.0 |
| Training | Clusterization | π± | 5×10⁵ | 0.2-20.0 |
| Testing | Clusterization | π± | 1×10⁵ × 7 | 5.0/7.5/10.0/12.5/15.0/17.5/20.0 |
| Testing | Clusterization | K± | 1×10⁵ × 7 | 5.0/7.5/10.0/12.5/15.0/17.5/20.0 |

Methodology

Algorithm Overview

An effective reconstruction algorithm for cluster counting must efficiently and accurately determine the number of primary ionizations in a waveform. As introduced in Sect. 1, the cluster-counting algorithm is typically decomposed into two steps: peak finding and clusterization. Peak finding detects the peaks from both primary and secondary ionizations, while clusterization discriminates primary ionizations among the peaks detected in the previous step.

The traditional peak-finding algorithm uses the first and second derivatives of a waveform [32]. Ionization electron pulses, characterized by a swift rise (mere nanoseconds) and prolonged decay (tens of nanoseconds), yield pronounced derivative values, facilitating peak identification. Higher-order derivatives enhance hidden peak detection but increase noise susceptibility. To mitigate high noise levels, preprocessing with low-pass filters, such as moving averages, is recommended before applying derivatives.

For traditional clusterization, a peak-merging algorithm is used. Electrons from a single primary cluster, which are typically spatially localized, exhibit proximate arrival times at the sense wire, forming discernible clusters in the waveform. Timing information from peak detection aids in distinguishing primary and secondary electron signals. Nonetheless, due to potential overlap between electrons from distinct primary clusters, a precise peak-merging requirement is crucial for the clusterization algorithm.
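
A minimal sketch of derivative-based peak finding with moving-average preprocessing is given below. It is a simplified illustration, not the algorithm of [32]: it uses only the second derivative, flags samples where the second derivative has a local minimum below a negative threshold, and the function names and threshold value are hypothetical.

```python
def moving_average(x, width=3):
    """Low-pass filter: centered moving average, shrinking at the edges."""
    half = width // 2
    out = []
    for i in range(len(x)):
        lo, hi = max(0, i - half), min(len(x), i + half + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out

def second_derivative_peaks(waveform, threshold, smooth_width=3):
    """Return sample indices where the smoothed waveform is sharply concave."""
    y = moving_average(waveform, smooth_width)

    # Discrete second derivative (central difference).
    d2 = [0.0] * len(y)
    for i in range(1, len(y) - 1):
        d2[i] = y[i + 1] - 2.0 * y[i] + y[i - 1]

    peaks = []
    for i in range(1, len(y) - 1):
        # A pulse top shows up as a local minimum of d2 below -threshold.
        if d2[i] < -threshold and d2[i] <= d2[i - 1] and d2[i] < d2[i + 1]:
            peaks.append(i)
    return peaks

# Toy waveform with two triangular pulses centered at samples 10 and 30.
toy = [0.0] * 50
for center in (10, 30):
    toy[center - 1] = 0.5
    toy[center] = 1.0
    toy[center + 1] = 0.5

print(second_derivative_peaks(toy, threshold=0.2))
```

Raising the threshold or widening the smoothing window trades efficiency for purity, which is the tuning difficulty the text attributes to rule-based methods.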

The aforementioned traditional rule-based algorithms, which depend on incomplete raw hit information and human expertise, often fail to achieve state-of-the-art performance. In contrast, ML-based algorithms harness an abundance of labeled samples for supervised learning, directly extracting intricate data features. In the first step of cluster counting, a long short-term memory (LSTM) network is employed to discriminate between signals and noise. Both the primary and secondary ionization signals are detected during this step. The second step of the algorithm, clusterization, is achieved using a dynamic graph convolutional neural network (DGCNN). The DGCNN is used to classify whether a peak detected in the first step originates from primary ionization.

Peak-finding

The peak-finding algorithm identifies all ionization peaks from a waveform. To reduce complexity, waveforms are divided into sliding windows with a window size of 15 data points. For each sliding window, a label is added based on MC truth information. Labels can identify a signal candidate or a noise candidate, defining peak finding as a binary classification.
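
The windowing and labeling step can be sketched as follows. The window size of 15 comes from the text; the stride of one sample and the rule that a window is a signal candidate when an MC-truth electron arrival falls on its central sample are assumptions made for illustration, and `make_windows` is a hypothetical helper name.

```python
def make_windows(waveform, truth_indices, window=15, stride=1):
    """Slice a waveform into fixed-size windows with binary labels.

    Returns a list of (segment, label) pairs, where label is 1 (signal
    candidate) or 0 (noise candidate).
    """
    truth = set(truth_indices)
    center = window // 2
    samples = []
    for start in range(0, len(waveform) - window + 1, stride):
        segment = waveform[start:start + window]
        # ASSUMPTION: label by MC truth at the window's central sample.
        label = 1 if (start + center) in truth else 0
        samples.append((segment, label))
    return samples

# Toy waveform of 40 samples with MC-truth arrivals at samples 10 and 25.
toy_waveform = [0.0] * 40
windows = make_windows(toy_waveform, truth_indices=[10, 25])
print(len(windows), sum(label for _, label in windows))
```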

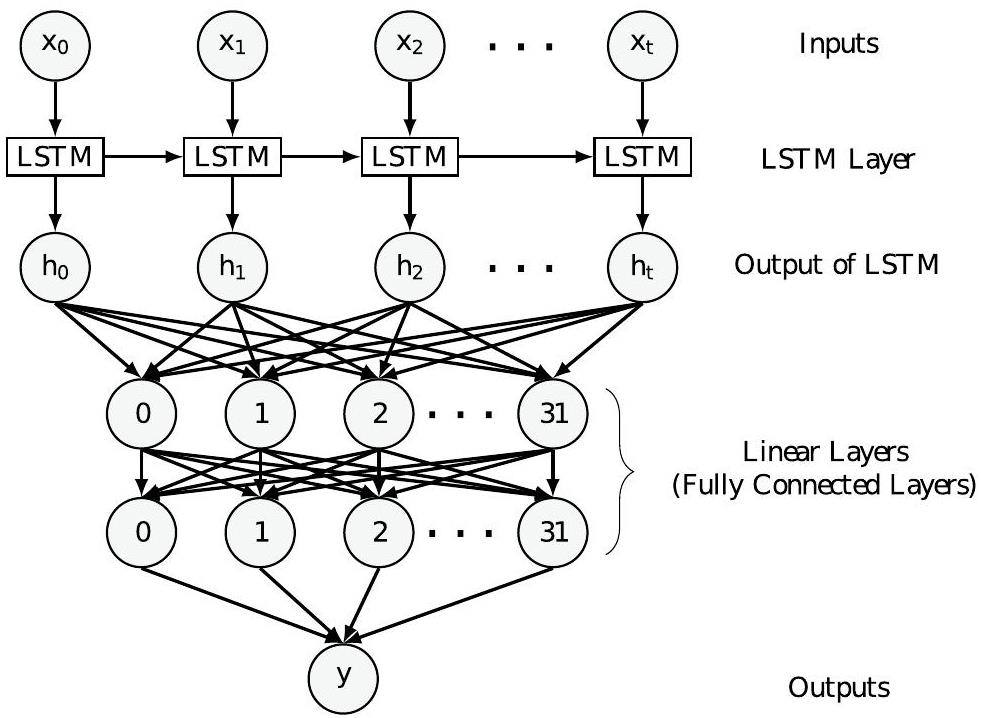

To process time-series data in sliding windows, an LSTM-based network is explored for the peak-finding algorithm. LSTM, a type of recurrent neural network (RNN), can process sequential data and has been successfully used in a range of applications [33]. RNNs are particularly effective for sequence modeling tasks, such as sequence prediction and labeling, because they utilize a dynamic contextual window that captures the entire history of the sequence. However, RNNs face limitations in processing long sequences effectively and are susceptible to issues related to vanishing and exploding gradients [34, 35].

LSTMs have a unique architecture that includes memory blocks within a recurrent hidden layer. These memory blocks consist of memory cells and gates. Memory cells store the temporal state of the network through self-connections, while special multiplicative units, known as gates, regulate information flow. Each memory block includes an input gate to manage input activations in the memory cell, an output gate to control the output flow of cell activations, and a forget gate to scale the internal state of the cell before adding it as an input through self-recurrent connections, thereby adaptively forgetting or resetting the cell's memory [35, 36].

The architecture of the LSTM-based peak-finding algorithm is summarized as follows:

• An LSTM layer

The LSTM layer is used for processing sequential data and capturing long-term dependencies between data points. This LSTM layer has one feature in the input data and 32 features in the hidden state.

• Two linear layers

The neural network model consists of two linear layers that serve as fully connected layers. The first layer has an input size of 32 and an output size of 32. The second layer has an input size of 32 and an output size of 1. A sigmoid activation function [37] is applied to the output of the second layer to produce the final classification result.
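
The layer sizes listed above can be written as a short PyTorch module. This is a sketch under stated assumptions rather than the authors' code: the class name `PeakFinder` is hypothetical, the ReLU between the two linear layers and the use of the last time step of the LSTM output are assumptions that the text does not specify.

```python
import torch
from torch import nn

class PeakFinder(nn.Module):
    """LSTM (1 -> 32) followed by two linear layers (32 -> 32 -> 1)."""

    def __init__(self, hidden_size=32):
        super().__init__()
        # One input feature per time step (the waveform amplitude).
        self.lstm = nn.LSTM(input_size=1, hidden_size=hidden_size,
                            batch_first=True)
        self.fc1 = nn.Linear(hidden_size, 32)
        self.fc2 = nn.Linear(32, 1)

    def forward(self, x):
        # x: (batch, window) -> (batch, window, 1)
        out, _ = self.lstm(x.unsqueeze(-1))
        last = out[:, -1, :]                 # ASSUMPTION: last time step
        hidden = torch.relu(self.fc1(last))  # ASSUMPTION: ReLU activation
        # Sigmoid maps the single output to a signal probability.
        return torch.sigmoid(self.fc2(hidden)).squeeze(-1)

model = PeakFinder()
batch = torch.zeros(64, 15)  # batch of 64 windows, 15 samples each
probabilities = model(batch)
print(probabilities.shape)
```

A window would be classified as a signal candidate when its output probability exceeds a chosen threshold.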

Figure 4 illustrates the network structure of the LSTM-based model used to train the peak-finding algorithm. The model was trained using a simulated sample of π mesons, consisting of 5×10⁵ waveform events with momenta ranging from 0.2 GeV/c to 20 GeV/c. After preprocessing, the data were divided into multiple batches, each with a batch size of 64, and the training process spanned 50 epochs.

Binary cross-entropy loss, a pivotal function for binary classification tasks, quantifies the discrepancy between true labels and predicted probabilities. This function effectively guides the model towards accurate predictions by handling cases where the output is a probability value between zero and one, making it particularly suited for our binary classification task. The Adam optimizer [38] was adopted, with an initial learning rate of 10⁻⁴, which was reduced by a factor of 0.5 every 10 epochs. To further enhance algorithm performance, Optuna [39], a hyperparameter optimization framework, was employed to tune parameters such as the learning rate and network size.
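
The loss and the learning-rate schedule can be made concrete with a few lines of plain Python. The sketch assumes the standard mean binary cross-entropy and a step decay applied at epoch boundaries; the function names are illustrative, and the initial rate, decay factor, and step size are the values quoted in the text.

```python
import math

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean binary cross-entropy between labels (0/1) and probabilities."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(y_true)

def step_lr(epoch, initial_lr=1e-4, gamma=0.5, step=10):
    """Learning rate after halving every `step` epochs."""
    return initial_lr * gamma ** (epoch // step)

print(binary_cross_entropy([1, 0, 1], [0.9, 0.2, 0.6]))
for epoch in (0, 10, 20, 49):
    print(epoch, step_lr(epoch))
```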

Clusterization

After applying the LSTM-based peak-finding algorithm, all ionization signal peaks, including both primary and secondary peaks, were detected. A second algorithm, termed the clusterization algorithm, was then developed to determine the number of primary ionization peaks.

In principle, secondary ionization occurs locally with respect to primary electrons if the primary electrons possess sufficient energy. This proximity causes electrons from a single cluster to appear close together in the waveform, a property that can be exploited to design algorithms for distinguishing between primary and secondary electrons. As mentioned in Sect. 1, traditional algorithms for this purpose rely on combining adjacent peaks.
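
A traditional peak-merging rule of this kind can be sketched as follows. The merging criterion (a fixed maximum time gap between consecutive peaks) and the 2 ns value are hypothetical choices for illustration; the text does not specify the requirement used in the reference algorithm.

```python
def merge_peaks(peak_times_ns, max_gap_ns):
    """Group peaks whose consecutive time differences are within max_gap_ns.

    Each group is interpreted as one primary cluster.
    """
    clusters = []
    for t in sorted(peak_times_ns):
        if clusters and t - clusters[-1][-1] <= max_gap_ns:
            clusters[-1].append(t)   # close to the previous peak: same cluster
        else:
            clusters.append([t])     # large gap: start a new cluster
    return clusters

peaks = [10.0, 11.0, 30.0, 31.5, 80.0]
clusters = merge_peaks(peaks, max_gap_ns=2.0)
print(len(clusters), clusters)
```

Because inter-cluster and intra-cluster time differences overlap, no single value of the gap separates them cleanly, which motivates the learned approach described next.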

GNNs, which operate on graph-structured data, are well-suited for handling complex information. The key feature of GNNs is pairwise message passing, where graph nodes iteratively update their representations by exchanging information with their neighbors [40]. For cluster counting, peak timing information is set as the node feature, while edges are initially connected based on timing similarities. GNNs can effectively learn the complex temporal structure of primary and secondary electrons through this message-passing mechanism.

A DGCNN, a specialized type of GNN, was applied to the clusterization algorithm. DGCNNs are designed to learn from the local structure of point clouds, enabling high-level tasks such as classification and segmentation. The edge convolution layer, a critical component of DGCNNs, dynamically computes graphs at each network layer. This layer is differentiable and can be integrated into existing architectures. In this study, the timings of peaks detected during peak finding were represented as a graph. Each peak's timing was encoded as a node feature, while edge distances were defined by the temporal similarity between nodes. Nodes were connected to their k nearest neighbors (k-NN) [41]. During training, nodes updated their features and connections through message passing, enabling the network to capture hidden local relationships between peaks and achieve better performance in classifying primary and secondary ionizations.
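
The initial graph construction can be illustrated with a small k-NN routine on peak times. This is only a sketch of the first graph: in a DGCNN the neighbors are recomputed in feature space at every edge convolution layer. The function name `knn_edges` and the toy peak times are hypothetical; the default of k = 4 is the tuned value reported in this paper.

```python
def knn_edges(peak_times, k=4):
    """Directed edges (source, target) linking each node to its k nearest peaks in time."""
    edges = []
    for i, t_i in enumerate(peak_times):
        # Sort all other peaks by time distance to peak i.
        others = sorted(
            (abs(t_i - t_j), j) for j, t_j in enumerate(peak_times) if j != i
        )
        for _, j in others[:k]:
            edges.append((j, i))  # messages flow from neighbor j to node i
    return edges

times = [10.0, 11.0, 12.5, 40.0, 41.0, 90.0]
edges = knn_edges(times, k=2)
print(len(edges), edges[:4])
```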

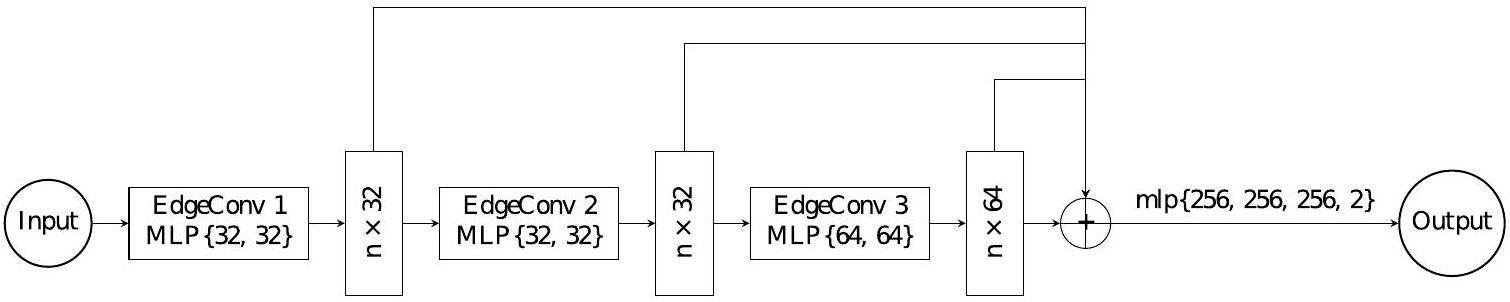

The neural network architecture of the clusterization algorithm is summarized as follows:

• Three dynamic edge convolution layers

Three dynamic edge convolution layers process graph-structured data by dynamically creating edges between each node and its neighboring nodes, thereby capturing local information. A new graph is generated at each layer of the GNN based on the k-NN approach [42]. The multi-layer perceptrons within the dynamic edge convolution layers map the number of input channels to the number of output channels. The features from three dynamic edge convolution layers were concatenated to get outputs with 32+32+64=128 dimensions.

• A 4-layer multi-layer perceptron (MLP)

A multi-layer perceptron (MLP) is a type of feedforward neural network that consists of multiple layers of neurons connected in a sequential manner [43]. This 4-layer MLP takes the concatenated output of the dynamic edge convolution layers as input. It has three hidden layers, each with 256 neurons, and one output layer with 2 channels. The dropout rate is set to 0.5, meaning that during training each neuron has a 50% probability of being randomly dropped, which helps prevent overfitting and encourages the network to learn more robust features. Finally, the model applies a log-softmax activation function to the output of the MLP and returns the classification log-probabilities.

Figure 5 illustrates the neural network architecture for clusterization. The model was trained using a pion sample containing 5×10⁵ waveform events with momenta ranging from 0.2 GeV/c to 20 GeV/c. After preprocessing, the data were divided into multiple batches, each with a batch size of 128, and training was conducted over 100 epochs.

For this binary classification model, the negative log-likelihood loss function and the Adam optimizer were adopted, with an initial learning rate of 10⁻³, which was reduced by a factor of 0.5 every 10 epochs. Hyperparameters, including the sizes of the three MLPs in the dynamic edge convolution layers and the MLP serving as a fully connected layer, were tuned using Optuna. The value of k in k-NN, which determines how dynamic edge convolution layers establish relationships between nodes and their k nearest neighbors, was optimized to 4.

Performance

The two-step model was trained using supervised learning on a large number of waveform samples. To evaluate the model's generalization performance, it was applied to test samples.

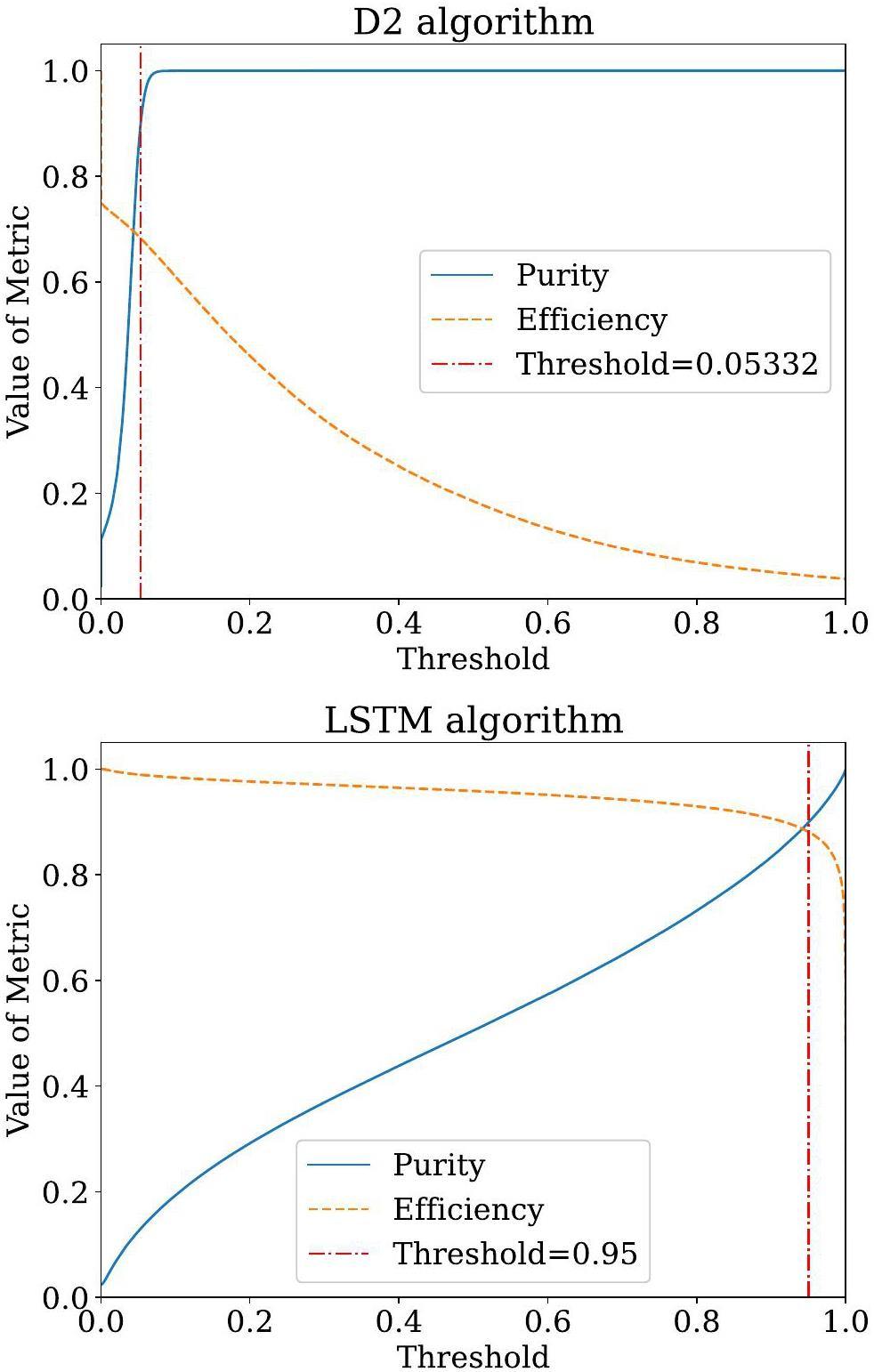

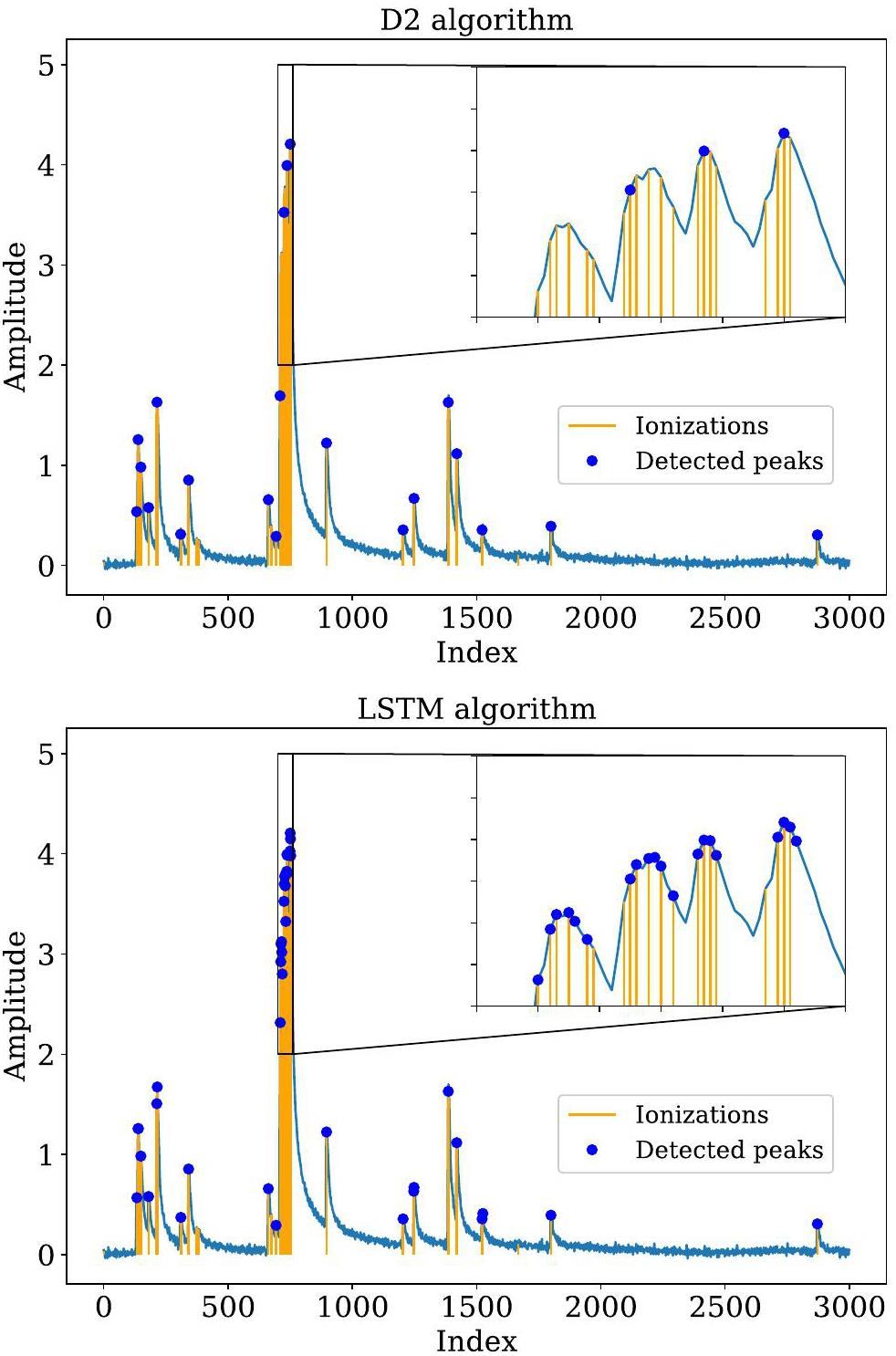

For the peak-finding algorithm, both the LSTM-based algorithm and a traditional second-derivative-based (D2) algorithm served as classifiers. Their performance was evaluated using standard classifier metrics, including precision (purity) and recall (efficiency). Purity and efficiency are defined in terms of true positives (TP), false positives (FP), and false negatives (FN) [44], as shown in Eq. (1):

$$\mathrm{Purity} = \frac{TP}{TP + FP}, \qquad \mathrm{Efficiency} = \frac{TP}{TP + FN}. \tag{1}$$

| Algorithm | Purity | Efficiency |
|---|---|---|
| LSTM algorithm | 0.8986 | 0.8820 |
| D2 algorithm | 0.8986 | 0.6827 |
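
Purity (precision) and efficiency (recall) can be computed from detected and MC-truth peak positions as in the sketch below. The greedy matching within a tolerance of one sample is an assumption made for illustration; the paper does not state its matching rule, and the function names are hypothetical.

```python
def match_peaks(detected, truth, tolerance=1):
    """Count TP, FP, FN by greedily matching detected peaks to truth peaks."""
    unmatched = sorted(truth)
    tp = 0
    for d in sorted(detected):
        # ASSUMPTION: a detection is correct if an unused truth peak lies
        # within `tolerance` samples of it.
        hit = next((t for t in unmatched if abs(t - d) <= tolerance), None)
        if hit is not None:
            unmatched.remove(hit)
            tp += 1
    fp = len(detected) - tp
    fn = len(unmatched)
    return tp, fp, fn

def purity_efficiency(tp, fp, fn):
    """Purity = TP / (TP + FP); efficiency = TP / (TP + FN)."""
    purity = tp / (tp + fp) if tp + fp else 0.0
    efficiency = tp / (tp + fn) if tp + fn else 0.0
    return purity, efficiency

tp, fp, fn = match_peaks(detected=[10, 21, 35, 50], truth=[10, 20, 30, 60, 70])
print(tp, fp, fn, purity_efficiency(tp, fp, fn))
```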

The clusterization algorithm was applied after peak finding to determine the number of primary clusters from the detected peaks. After implementing both the LSTM-based peak-finding and DGCNN-based clusterization algorithms, the number-of-cluster distribution for a charged particle was obtained, enabling the calculation of separation power for different types of charged particles. In this study, clusterization was achieved by performing node classification in the DGCNN. To achieve optimal performance, the classifier threshold was tuned to maximize the K/π-separation power. The K/π-separation power is defined as

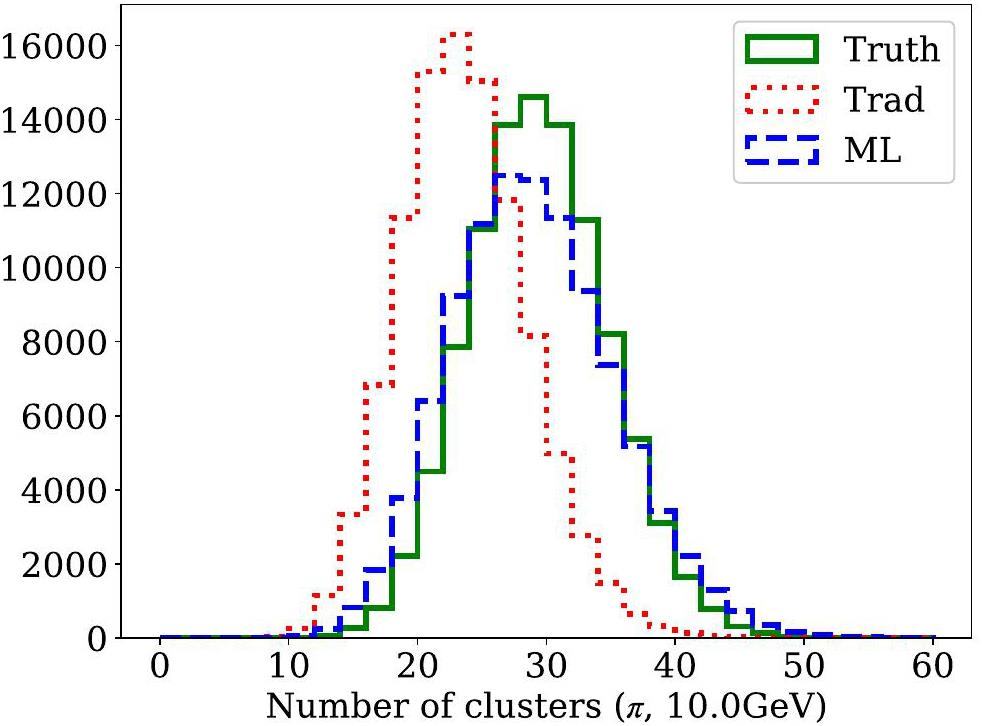

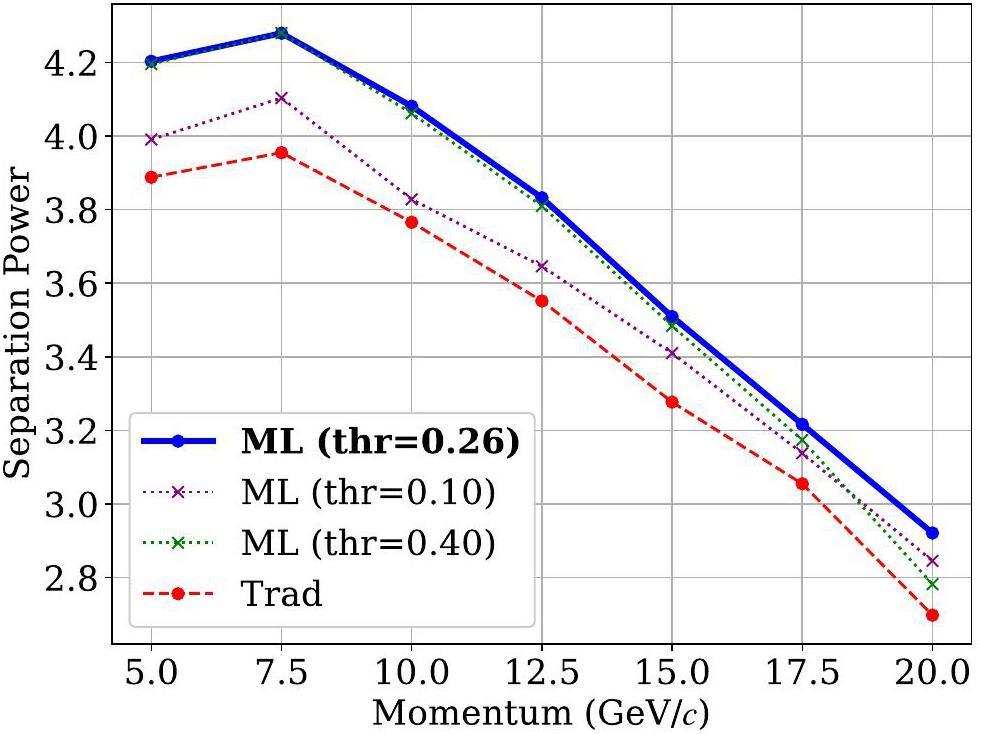

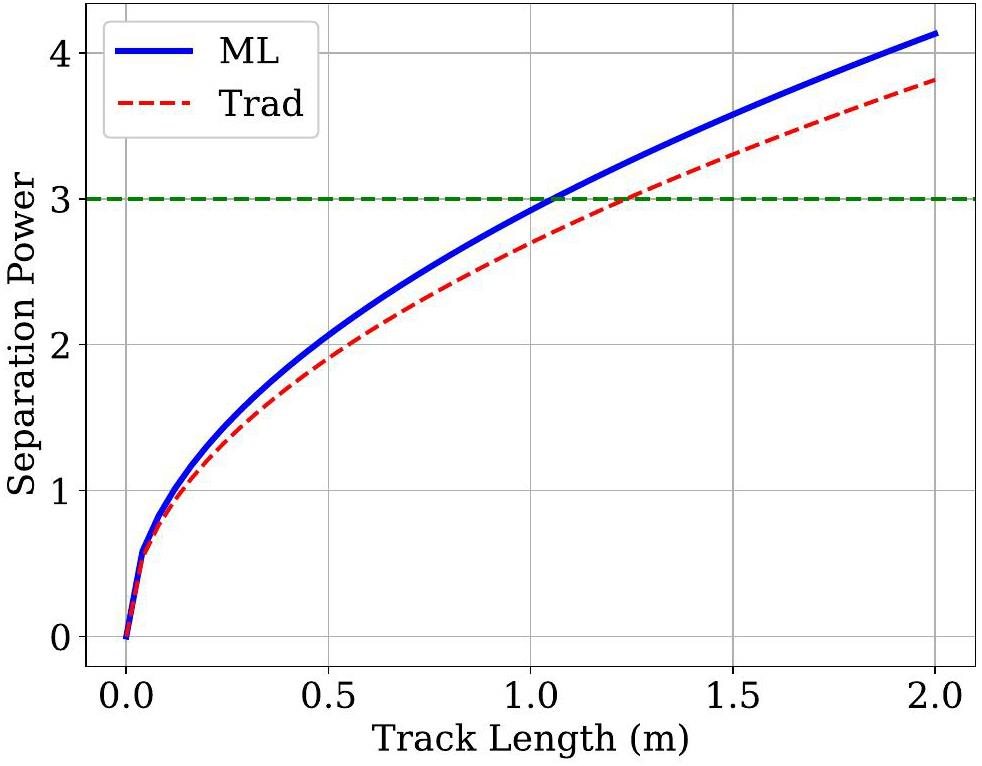
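
A minimal sketch of how a separation power can be computed from two number-of-cluster distributions is shown below. It assumes a commonly used convention in which the difference between the two mean values is divided by the average of the two standard deviations; this convention, the function name, and the toy inputs are assumptions for illustration.

```python
from statistics import mean, stdev

def separation_power(n_pi, n_k):
    """|mean_pi - mean_K| divided by the average of the two resolutions.

    ASSUMPTION: resolutions are taken as sample standard deviations of the
    per-track cluster counts; a Gaussian fit width could be used instead.
    """
    average_sigma = (stdev(n_pi) + stdev(n_k)) / 2.0
    return abs(mean(n_pi) - mean(n_k)) / average_sigma

# Toy per-track cluster counts for pions and kaons (not simulation output).
toy_pi = [100, 102, 98, 100]
toy_k = [90, 92, 88, 90]
print(separation_power(toy_pi, toy_k))
```

Scanning the classifier threshold and recomputing this quantity for each value is one straightforward way to carry out the threshold tuning mentioned above.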

Using the optimized threshold, Fig. 8 compares the number-of-cluster distributions derived from the MC truth, the traditional algorithm, and the DGCNN-based algorithm. The mean value of the number-of-cluster distribution obtained from the ML-based algorithm closely aligns with the MC truth, demonstrating that the ML-based algorithm achieves higher efficiency than traditional approaches. Figure 9 presents the K/π separation powers at various momenta for a track length of 1 m using different algorithms. The ML-based cluster-counting algorithm shows approximately a 10% improvement in separation power across all momenta compared to traditional methods. Since separation power scales with the square root of the track length, this performance improvement corresponds to an effective increase of about 20% in the detector radius when using the traditional algorithm. This enhancement could significantly reduce the overall cost of the detector. Detailed numerical results are listed in Table 3. Additionally, K/π separation power for a track with

| Algorithm | Metric | 5.0 GeV/c | 7.5 GeV/c | 10.0 GeV/c | 12.5 GeV/c | 15.0 GeV/c | 17.5 GeV/c | 20.0 GeV/c |
|---|---|---|---|---|---|---|---|---|
| ML-based algorithm | π± efficiency | 1.003 | 1.001 | 0.999 | 0.999 | 0.998 | 0.998 | 0.999 |
| ML-based algorithm | K± efficiency | 1.014 | 1.011 | 1.010 | 1.008 | 1.006 | 1.004 | 1.003 |
| ML-based algorithm | K/π separation power | 4.203 | 4.279 | 4.081 | 3.832 | 3.509 | 3.216 | 2.921 |
| Traditional algorithm | π± efficiency | 0.814 | 0.808 | 0.803 | 0.801 | 0.801 | 0.800 | 0.800 |
| Traditional algorithm | K± efficiency | 0.837 | 0.830 | 0.824 | 0.820 | 0.817 | 0.814 | 0.812 |
| Traditional algorithm | K/π separation power | 3.888 | 3.954 | 3.765 | 3.550 | 3.277 | 3.054 | 2.697 |
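
The relative gain in separation power and its track-length equivalent can be recomputed from the separation powers listed in the table above. The sketch assumes only the square-root scaling of separation power with track length stated in the text; the variable and function names are illustrative.

```python
momenta = [5.0, 7.5, 10.0, 12.5, 15.0, 17.5, 20.0]            # GeV/c
ml_power = [4.203, 4.279, 4.081, 3.832, 3.509, 3.216, 2.921]   # ML-based
trad_power = [3.888, 3.954, 3.765, 3.550, 3.277, 3.054, 2.697] # traditional

def equivalent_length_factor(gain):
    """Track-length factor giving the same gain, since S scales as sqrt(L)."""
    return gain ** 2

# Ratio of ML-based to traditional separation power at each momentum.
gains = [s_ml / s_tr for s_ml, s_tr in zip(ml_power, trad_power)]

for p, gain in zip(momenta, gains):
    print(f"p = {p:5.1f} GeV/c  gain = {gain:.3f}  "
          f"equivalent length factor = {equivalent_length_factor(gain):.3f}")
```

For a gain of 1.10 the factor is 1.21, which is the origin of the statement that a 10% improvement corresponds to roughly 20% more track length.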

Conclusion

In this study, we developed a cluster-counting algorithm that incorporates both peak-finding and clusterization algorithms based on ML. Our approach offers several advantages over traditional cluster-counting methods. In particular, our peak-finding algorithm demonstrated better efficiency than the derivative-based algorithm. The clusterization algorithm provides a Gaussian-distributed number of clusters and achieves an efficiency close to that of the ground truth (MC truth). The entire cluster-counting algorithm outperformed traditional methods, showing a 10% improvement in the K/π separation power. This level of PID performance is approximately equivalent to what the traditional algorithms would achieve with a detector about 20% larger. With such performance, the current design of the CEPC drift chamber meets the necessary PID requirements. Furthermore, the critical role of ML-based algorithms in cluster counting suggests their potential applications in future high-energy physics experiments.

References

CEPC Technical Design Report - Accelerator. (2023). https://doi.org/10.48550/arXiv.2312.14363

CEPC conceptual design report: volume 2 - physics & detector. (2018). https://doi.org/10.48550/arXiv.1811.10545

Precision Higgs physics at the CEPC. Chin. Phys. C 43.

Higgs to ττ analysis in the future e+e- Higgs factories. (2019). https://doi.org/10.48550/arXiv.1903.12327

Measurements of decay branching fractions of H→bb̄/cc̄/gg in associated (e+e−/μ+μ−)H production at the CEPC. Chin. Phys. C 44.

Search for invisible decays of the Higgs boson produced at the CEPC. Chin. Phys. C 44.

Data-taking strategy for the precise measurement of the W boson mass with a threshold scan at circular electron positron colliders. Eur. Phys. J. C 80, 66 (2020). https://doi.org/10.1140/epjc/s10052-019-7602-x

Electroweak physics at CEPC. Int. J. Mod. Phys. A 34.

CEPC-SPPC accelerator status towards CDR. Int. J. Mod. Phys. A 32.

CEPC and SppC Status - From the completion of CDR towards TDR. Int. J. Mod. Phys. A 36.

Analysis of Bc→τντ at CEPC. Chin. Phys. C 45.

Analysis of Bs→ϕνν̄ at CEPC. Phys. Rev. D 105.

Requirement analysis for dE/dx measurement and PID performance at the CEPC baseline detector. Nucl. Instrum. Meth. A 1047.

The use of multiwire proportional counters to select and localize charged particles. Nucl. Instr. and Meth. 62, 262-268 (1968). https://doi.org/10.1016/0029-554X(68)90371-6

The Time Expansion Chamber and Single Ionization Cluster Measurement. IEEE Trans. Nucl. Sci. 26, 73-80 (1979). https://doi.org/10.1109/TNS.1979.4329616

FCC-ee: The Lepton Collider. Eur. Phys. J. Spec. Top. 228, 261-623 (2019). https://doi.org/10.1140/epjst/e2019-900045-4

Simulation study of particle identification using cluster counting technique for the BESIII drift chamber. J. Instrum. 18.

Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Phys. D: Nonlinear Phenom. 404.

Graph neural networks: A review of methods and applications. AI Open 1, 57-81 (2020). https://doi.org/10.1016/j.aiopen.2021.01.001

Jet tagging via particle clouds. Phys. Rev. D 101.

ParticleNet and its application on CEPC jet flavor tagging. Eur. Phys. J. C 84, 152 (2024). https://doi.org/10.1140/epjc/s10052-024-12475-5

Phase Transition Study Meets Machine Learning. Chin. Phys. Lett. 40.

Application of machine learning to the study of QCD transition in heavy ion collisions. Nucl. Tech. 46.

High energy nuclear physics meets machine learning. Nucl. Sci. Tech. 34, 88 (2023). https://doi.org/10.1007/s41365-023-01233-z

Machine learning in nuclear physics at low and intermediate energies. Sci. China-Phys. Mech. Astron. 66.

Studies on several problems in nuclear physics by using machine learning. 46.

PyTorch: An Imperative Style, High-Performance Deep Learning Library. Adv. Neural Inf. Process Syst. 32, 8024-8035 (2019). https://doi.org/10.48550/arXiv.1912.01703

Fast graph representation learning with PyTorch Geometric. In ICLR Workshop on Representation Learning on Graphs and Manifolds (2019). https://doi.org/10.48550/arXiv.1903.02428

Interfacing Geant4, Garfield++ and Degrad for the simulation of gaseous detectors. Nucl. Instr. and Meth. A 935, 121-134 (2019). https://doi.org/10.1016/j.nima.2019.04.110

Particle identification with the cluster counting technique for the IDEA drift chamber. Nucl. Instr. and Meth. A 1048.

Peak finding algorithm for cluster counting with domain adaptation. Comput. Phys. Commun. 300.

Long short-term memory. Neural Comput. 9, 1735-1780 (1997). https://doi.org/10.1162/neco.1997.9.8.1735

Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 5, 157-166 (1994). https://doi.org/10.1109/72.279181

A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput. 31, 1235-1270 (2019). https://doi.org/10.1162/neco_a_01199

Learning to forget: Continual prediction with LSTM. Neural Comput. 12, 2451-2471 (2000). https://doi.org/10.1162/089976600300015015

Adam: A method for stochastic optimization. Proceedings of the 3rd International Conference on Learning Representations (2015). https://doi.org/10.48550/arXiv.1412.6980

Optuna: A Next-generation Hyperparameter Optimization Framework. Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 2623-2631 (2019). https://doi.org/10.48550/arXiv.1907.10902

Neural message passing for quantum chemistry. Proceedings of the 34th International Conference on Machine Learning 70, 1263-1272 (2017). https://doi.org/10.48550/arXiv.1704.01212

Dynamic graph CNN for learning on point clouds. ACM Trans. Graph. 38 (2019). https://doi.org/10.1145/3326362

Introduction to machine learning: k-nearest neighbors. Ann. Transl. Med. 4 (2016). https://doi.org/10.21037/atm.2016.03.37

Approximation theory of the MLP model in neural networks. Acta Numer. 8, 143-195 (1999). https://doi.org/10.1017/S0962492900002919

A review on evaluation metrics for data classification evaluations. Int. J. Data Min. Knowl. Manag. Process 5, 1 (2015). https://doi.org/10.5121/ijdkp.2015.5201

The authors declare that they have no competing interests.